Multi Window 3D Interaction

Recently, a friend tagged me in this Twitter/𝕏 thread.

It features a 3D model being moved within a single window that is aware of other windows containing models. We can see the models in different windows interact with each other, both before and when the windows intersect.

I found this really exciting and I decided to have a go at building it.

Here’s the final result:

I figured I could decompose what was happening in the scene into the following steps:

- Each window spawns a 3D model

- Each open window is aware of its position on the screen

- Each open window is aware of the position of every other open window on the screen

- Each open window is aware of when it intersects/overlaps with another open window on the screen

Finally, each window is aware of the position of each model in every other window, and can render the models in the same position in its own 3D scene.

With that decomposed into steps, we can set out to build the scene. I’ve used React Three Fiber to build the 3D scene, as it provides flexible APIs for working with Three.js in React.

1. Spawn a 3D model when the window loads

Each window renders its own 3D model. We’ll be loading the 3D models and rendering them using Points and a BufferGeometry.

I’ve decided to render the models using Points as these have a pleasing aesthetic.

BufferGeometry allows us to define custom geometries by specifying the position of each vertex. This gives us a lot of flexibility, as each vertex’s position can be animated independently of the others.

The models will be loaded as glTF files using the GLTFLoader class from Three.js.

When the model is loaded, we’ll sample the surface of the model’s geometry to extract a set of points on that surface, which we’ll use as vertex positions.

We’ll use the MeshSurfaceSampler utility class from Three.js for sampling the model’s geometry.
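
To make the idea of surface sampling concrete, here is a dependency-free sketch of the general technique: pick a triangle with probability proportional to its area, then pick a uniformly random point inside it. This is only an illustration of the concept and is not MeshSurfaceSampler’s actual code. The names `Vec3`, `Triangle`, `triangleArea`, `samplePointInTriangle`, and `sampleSurface` are hypothetical.

```typescript
type Vec3 = [number, number, number];
type Triangle = [Vec3, Vec3, Vec3];

// Area of a triangle: half the length of the cross product of two edges.
function triangleArea([a, b, c]: Triangle): number {
  const ab: Vec3 = [b[0] - a[0], b[1] - a[1], b[2] - a[2]];
  const ac: Vec3 = [c[0] - a[0], c[1] - a[1], c[2] - a[2]];
  const cx = ab[1] * ac[2] - ab[2] * ac[1];
  const cy = ab[2] * ac[0] - ab[0] * ac[2];
  const cz = ab[0] * ac[1] - ab[1] * ac[0];
  return 0.5 * Math.sqrt(cx * cx + cy * cy + cz * cz);
}

// Uniform random point inside a triangle, using barycentric coordinates.
function samplePointInTriangle([a, b, c]: Triangle, rng: () => number): Vec3 {
  let u = rng();
  let v = rng();
  // (u, v) covers a unit square; reflect the half that falls outside the triangle.
  if (u + v > 1) {
    u = 1 - u;
    v = 1 - v;
  }
  const w = 1 - u - v;
  return [
    w * a[0] + u * b[0] + v * c[0],
    w * a[1] + u * b[1] + v * c[1],
    w * a[2] + u * b[2] + v * c[2],
  ];
}

// Sample `count` points, choosing triangles in proportion to their area.
// Returns a flat [x0, y0, z0, x1, y1, z1, ...] array.
function sampleSurface(
  triangles: Triangle[],
  count: number,
  rng: () => number = Math.random
): Float32Array {
  const areas = triangles.map(triangleArea);
  const totalArea = areas.reduce((sum, area) => sum + area, 0);
  if (totalArea === 0) return new Float32Array(0);

  const positions = new Float32Array(count * 3);
  for (let i = 0; i < count; i++) {
    // Walk the list of areas until the random target falls inside one of them.
    let target = rng() * totalArea;
    let index = 0;
    while (index < triangles.length - 1 && target >= areas[index]) {
      target -= areas[index];
      index++;
    }
    positions.set(samplePointInTriangle(triangles[index], rng), i * 3);
  }
  return positions;
}
```

The flat `Float32Array` layout matches what a BufferGeometry position attribute expects, which is why the sampled points can be used to “re-draw” the model.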

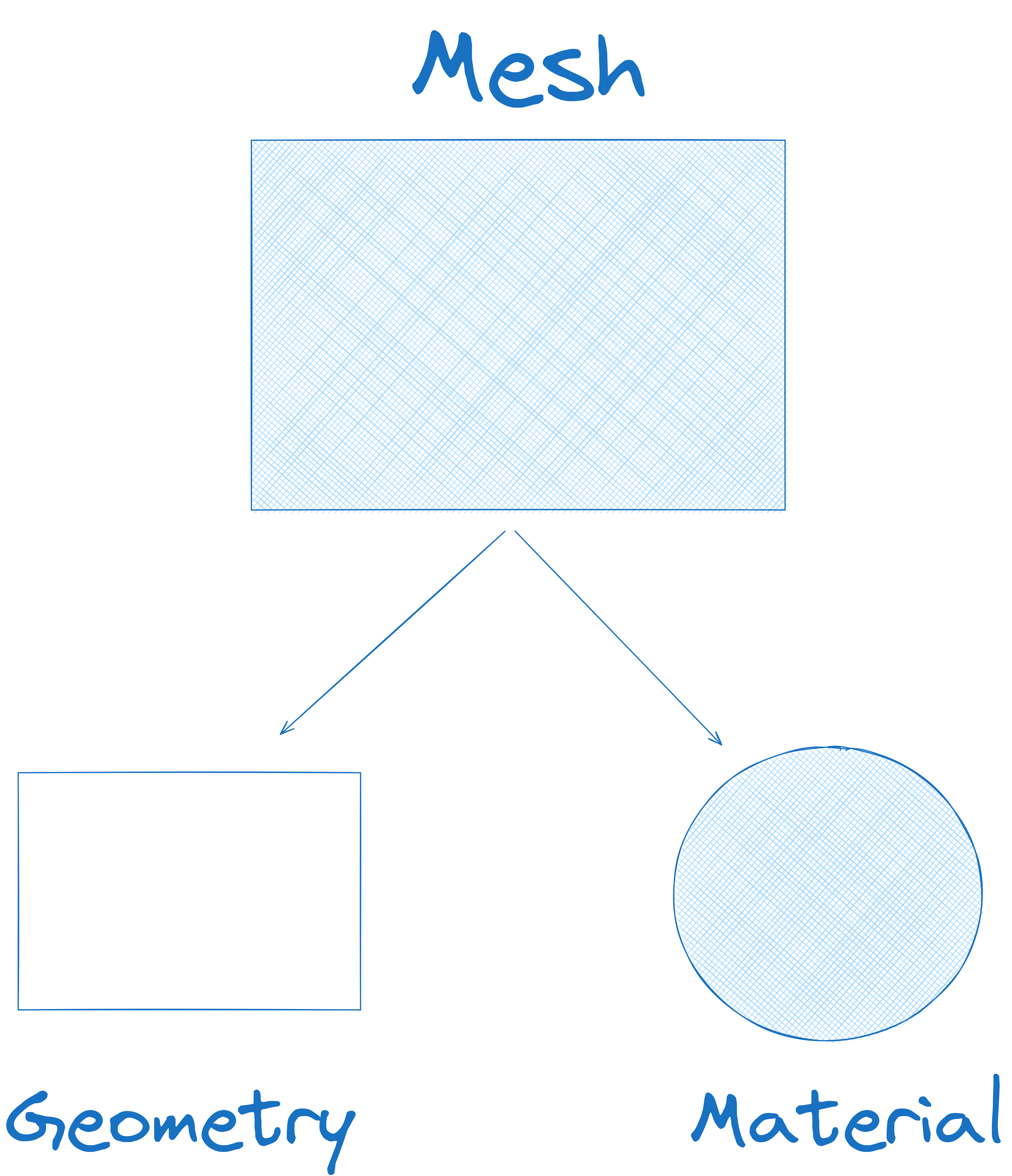

A 3D model (mesh) is made up of a geometry (the shape of the model) and a material (the appearance of the model).

The shape of a 3D model is determined by the position of its geometry’s vertices. Once we’re able to extract the position of the model’s vertices, we can “re-draw” the model using our own BufferGeometry.

A given model could be made up of multiple meshes, which when combined result in the final model. For example, with a 3D model of a bird, the wings, head and beak could all be separate meshes. In these cases, we’ll want to iterate over all the meshes in the model and extract their geometries. Once the geometry from each mesh has been extracted, we can then combine them into a single BufferGeometry, which we can then sample.

    import * as THREE from "three";
    import { useMemo } from "react";
    import { useLoader } from "@react-three/fiber";
    import { GLTFLoader } from "three/examples/jsm/loaders/GLTFLoader.js";
    import { MeshSurfaceSampler } from "three/examples/jsm/math/MeshSurfaceSampler.js";
    import * as BufferGeometryUtils from "three/examples/jsm/utils/BufferGeometryUtils.js";

    // Inside the component that renders the model:
    const gltf = useLoader(GLTFLoader, modelPath);

    const modelVertices = useMemo(() => {
      // The gltf object contains a number of nodes, some of which are meshes.
      // We want to filter for the meshes and get their geometries
      // so we can merge them into one geometry, which we can then sample.
      const nodes = Object.values(gltf.nodes);
      const geometries = nodes.reduce<THREE.BufferGeometry[]>(
        (accumulator, currentValue) => {
          // Skip undefined entries and any node that is not a mesh.
          if (!(currentValue instanceof THREE.Mesh)) return accumulator;
          return [...accumulator, currentValue.geometry];
        },
        []
      );

      // Always return a Float32Array so callers get a consistent type.
      if (geometries.length === 0) {
        return new Float32Array(0);
      }

      // mergeGeometries returns null when the geometries cannot be merged.
      const mergedGeometry = BufferGeometryUtils.mergeGeometries(geometries);
      if (!mergedGeometry) {
        return new Float32Array(0);
      }

      // The sampler only reads the geometry, so the default material is fine.
      const mergedMesh = new THREE.Mesh(mergedGeometry);
      const sampler = new MeshSurfaceSampler(mergedMesh).build();

      const sampleCount = 4000;
      const sampleVector = new THREE.Vector3();
      const vertices = new Float32Array(sampleCount * 3);

      // Each sample writes a random surface point into sampleVector.
      for (let i = 0; i < sampleCount; i++) {
        sampler.sample(sampleVector);
        vertices.set([sampleVector.x, sampleVector.y, sampleVector.z], i * 3);
      }

      return vertices;
    }, [gltf]);

Once we’ve gotten the vertex positions from the model, we can render a BufferGeometry with Points based on the positions.

2. Each open window is aware of its own position on the screen

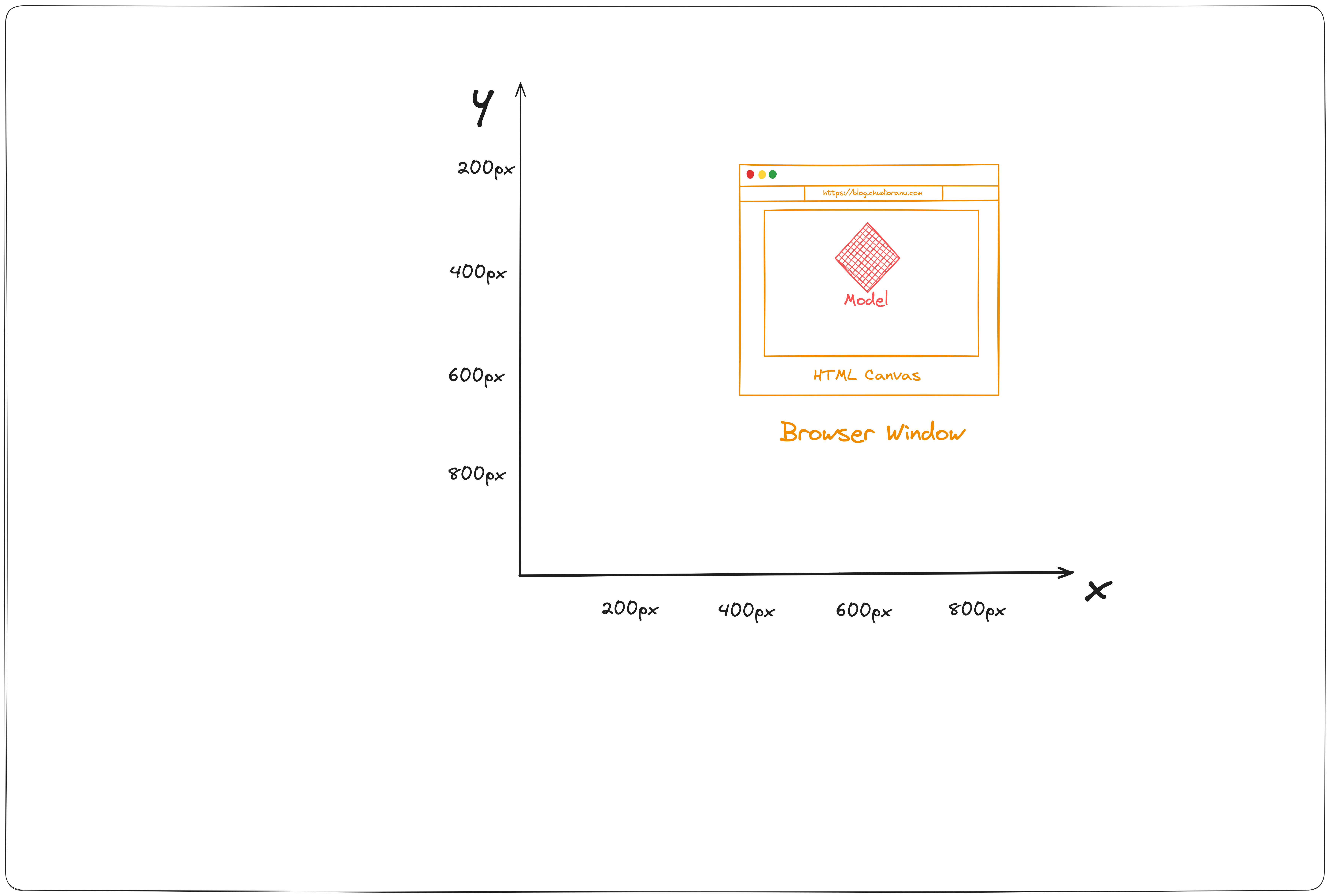

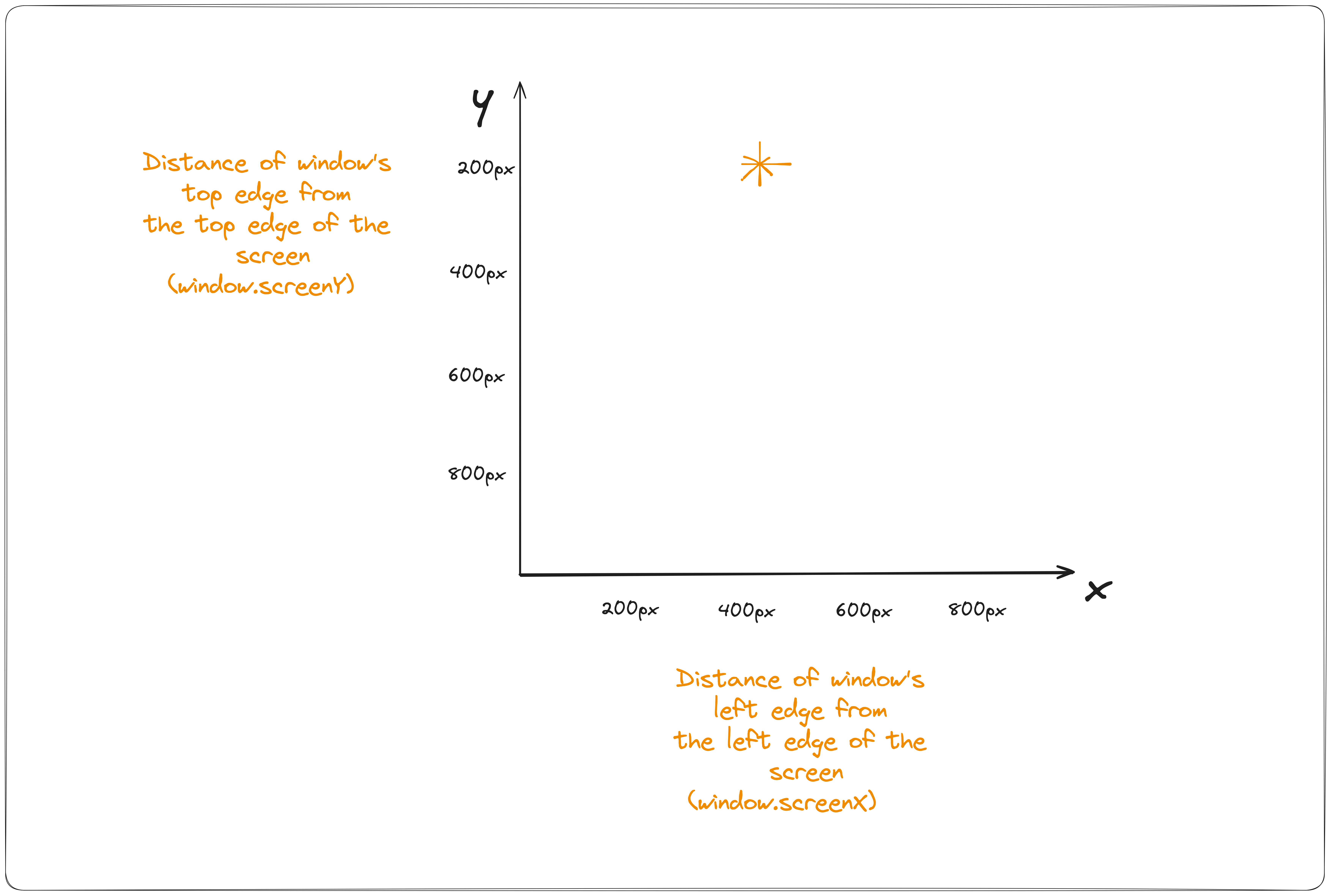

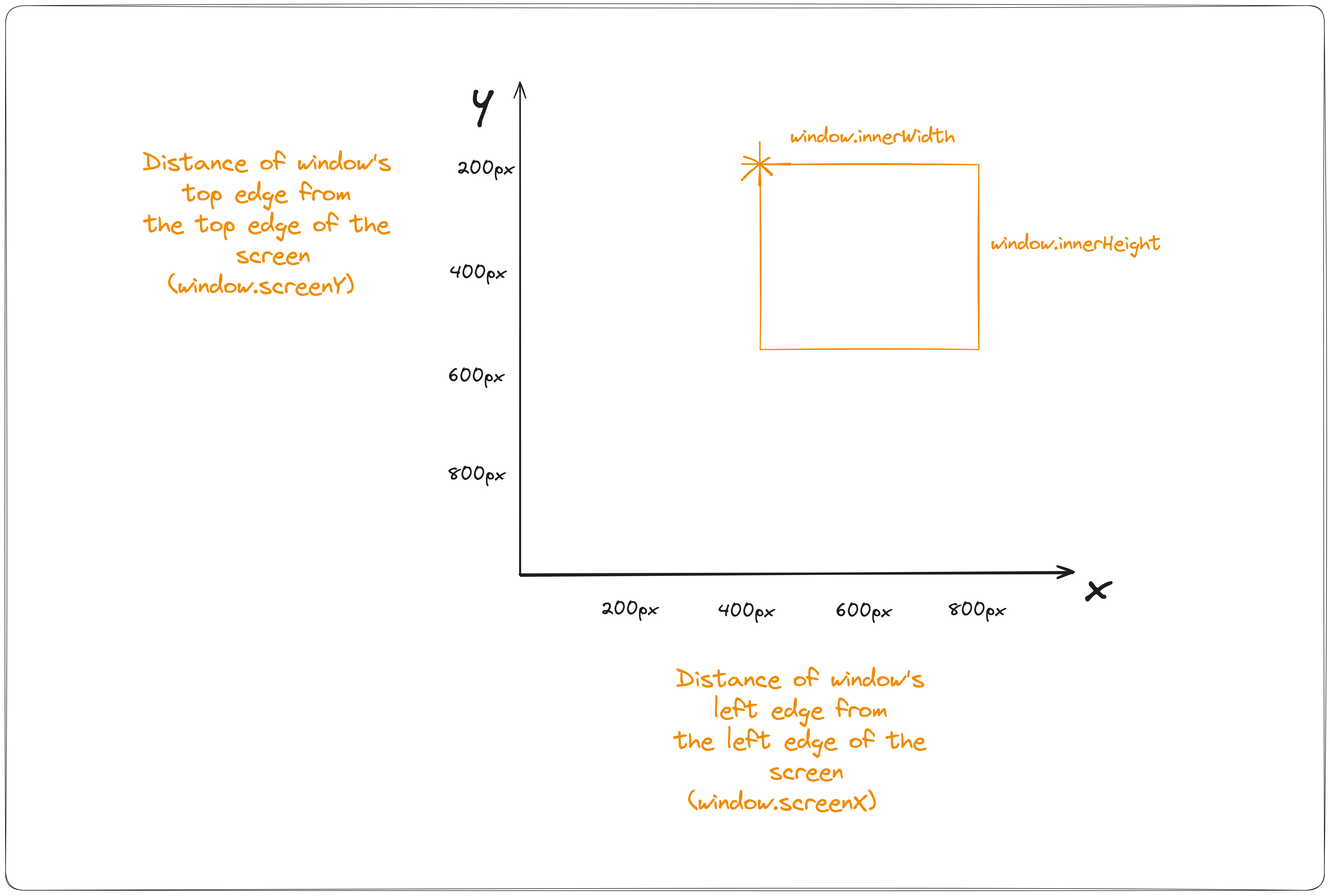

A given window needs to be able to tell its own position. We can visualize each window as a box which has a width and a height and is positioned in a specific place within the screen’s Cartesian plane.

We can compute the position of this box with the window.screenX and window.screenY properties. These return, in CSS pixels, the distance from the left edge of the screen to the window’s left edge and from the top edge of the screen to the window’s top edge.

While this provides us with the X, Y co-ordinates for the box, we still need the width and height of the window in order to build out the box.

We can get these from window.innerWidth for the window’s width and window.innerHeight for the window’s height.
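
As a minimal sketch of the calculation above, the four values can be combined into a single box. The `Rectangle` and `WindowLike` types and the `getWindowRectangle` name are assumptions made for illustration; only the `left`, `right`, `top`, and `bottom` field names are taken from the overlap check later in this post.

```typescript
// Assumed shape for a window's box, in screen co-ordinates (CSS pixels).
interface Rectangle {
  left: number;
  top: number;
  right: number;
  bottom: number;
}

// The subset of `window` properties this sketch reads.
// The real `window` object satisfies this interface.
interface WindowLike {
  screenX: number;
  screenY: number;
  innerWidth: number;
  innerHeight: number;
}

// Build the window's box from its position and size.
function getWindowRectangle(win: WindowLike): Rectangle {
  const left = win.screenX;
  const top = win.screenY;
  return {
    left,
    top,
    right: left + win.innerWidth,
    bottom: top + win.innerHeight,
  };
}
```

In the browser this would be called as `getWindowRectangle(window)`. Note that innerWidth and innerHeight measure the viewport, so this box ignores browser chrome such as the toolbar; window.outerWidth and window.outerHeight would measure the whole window instead.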

With the above values computed, we can now treat the window as a box positioned at a specific place on the screen’s Cartesian plane.

We’ll run this calculation when the window mounts and we’ll store this value in the World component’s React state.

We’ll also use an interval loop to continuously check the window, to detect whether its position or size has changed since the last interval run. When it has, we’ll update the World component’s React state.
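
One way to sketch the change detection is a small watcher that remembers the last box it saw and only reports differences. This is an assumption about how such a check could be structured, not the code used in the demo; `Rectangle`, `rectanglesAreEqual`, and `createRectangleWatcher` are hypothetical names.

```typescript
// Assumed shape for a window's box, in screen co-ordinates (CSS pixels).
interface Rectangle {
  left: number;
  top: number;
  right: number;
  bottom: number;
}

function rectanglesAreEqual(a: Rectangle, b: Rectangle): boolean {
  return (
    a.left === b.left &&
    a.top === b.top &&
    a.right === b.right &&
    a.bottom === b.bottom
  );
}

// Returns a `poll` function. Each call reads the current rectangle and
// invokes `onChange` only when it differs from the last one seen.
function createRectangleWatcher(
  readRectangle: () => Rectangle,
  onChange: (next: Rectangle) => void
): () => void {
  let last = readRectangle();
  return function poll(): void {
    const next = readRectangle();
    if (!rectanglesAreEqual(last, next)) {
      last = next;
      onChange(next);
    }
  };
}
```

In a React component, `poll` could be passed to `setInterval` inside a `useEffect`, with `onChange` set to the state setter and `clearInterval` called in the effect’s cleanup. Only reporting real changes avoids re-rendering on every tick.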

3. Each open window is aware of every other open window and its position on the screen

Open windows communicate with each other via Local Storage. Each time a World component in a given window updates its React state, we’ll update Local Storage with the current values. By using listeners for Local Storage updates on each window, we can publish and subscribe to window position/size updates from every open window running the app.
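
A minimal sketch of the publish side, assuming every window writes its box into one shared JSON object under a single key. The key name, the per-window id, and the helper names (`KeyValueStore`, `readRegistry`, `publishRectangle`, `getPeerRectangles`) are all hypothetical; the demo’s actual storage layout may differ.

```typescript
// Assumed shape for a window's box, in screen co-ordinates (CSS pixels).
interface Rectangle {
  left: number;
  top: number;
  right: number;
  bottom: number;
}

// Minimal subset of the Web Storage API used here.
// `window.localStorage` satisfies this interface.
interface KeyValueStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
}

type WindowRegistry = { [windowId: string]: Rectangle };

const STORAGE_KEY = "multi-window-3d:windows"; // hypothetical key name

// Read every window's last published box; fall back to an empty registry.
function readRegistry(store: KeyValueStore): WindowRegistry {
  const raw = store.getItem(STORAGE_KEY);
  if (raw === null) return {};
  try {
    return JSON.parse(raw) as WindowRegistry;
  } catch {
    return {}; // ignore corrupted data
  }
}

// Publish this window's box so that other windows can read it.
function publishRectangle(
  store: KeyValueStore,
  windowId: string,
  rectangle: Rectangle
): void {
  const registry = readRegistry(store);
  registry[windowId] = rectangle;
  store.setItem(STORAGE_KEY, JSON.stringify(registry));
}

// All boxes except this window's own.
function getPeerRectangles(
  store: KeyValueStore,
  ownWindowId: string
): Rectangle[] {
  const registry = readRegistry(store);
  return Object.keys(registry)
    .filter((id) => id !== ownWindowId)
    .map((id) => registry[id]);
}
```

On the subscribe side, a window can listen for the browser’s `storage` event, which fires in other same-origin windows when a key changes but not in the window that made the change. This sketch does not remove entries for closed windows, and its read-modify-write step is not atomic across windows.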

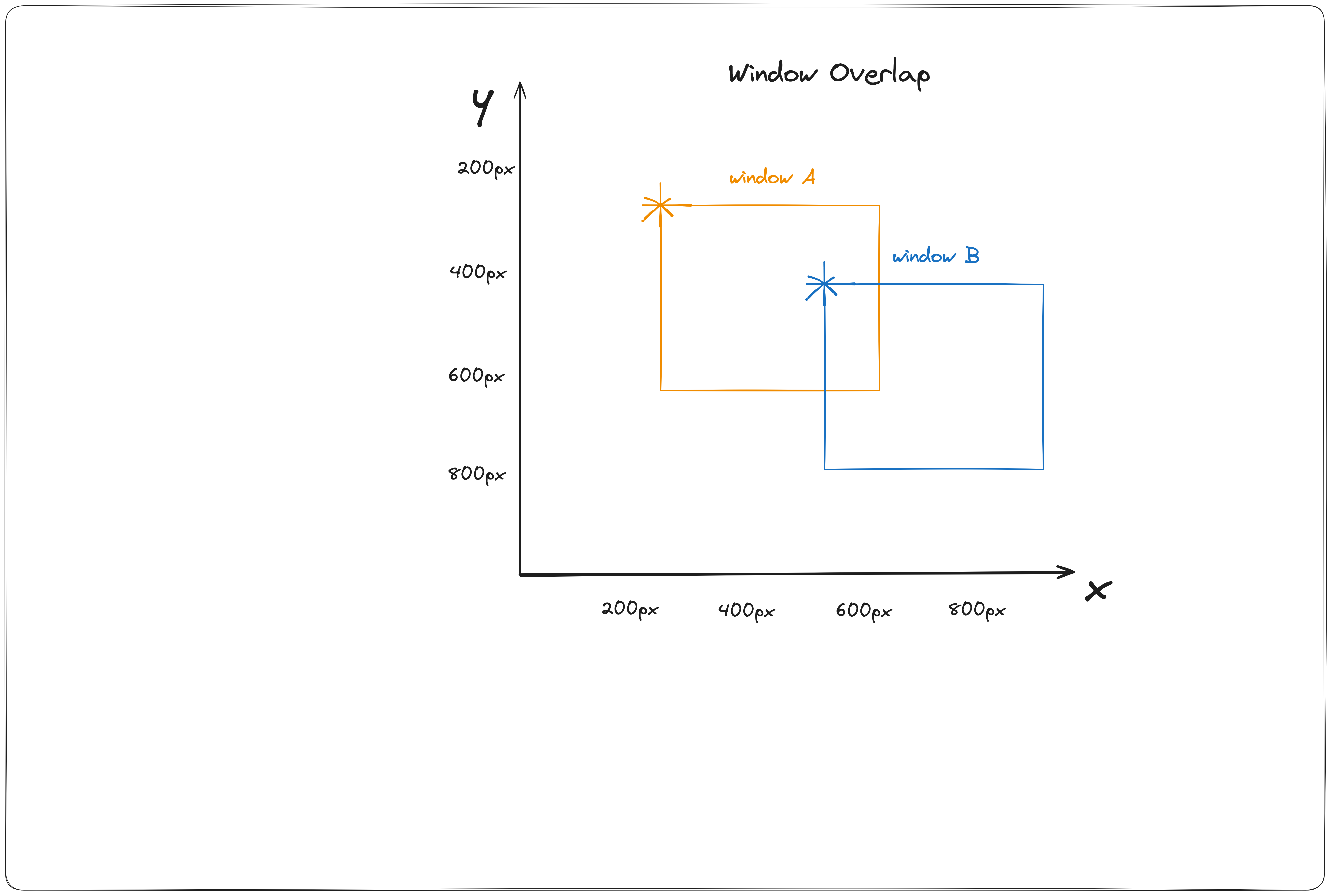

4. Each open window is aware of when it intersects/overlaps with another open window on the screen

Once the windows are aware of the position and size of each other, we can easily detect when they intersect/overlap by comparing their co-ordinates.

    export function doRectanglesHaveOverlap(
      firstRectangle: Rectangle,
      secondRectangle: Rectangle
    ) {
      return (
        firstRectangle.left < secondRectangle.right &&
        firstRectangle.right > secondRectangle.left &&
        firstRectangle.top < secondRectangle.bottom &&
        firstRectangle.bottom > secondRectangle.top
      );
    }

This approach is the well-known axis-aligned bounding box (AABB) check used for 2D collision detection in game development. LearnOpenGL reference: https://learnopengl.com/In-Practice/2D-Game/Collisions/Collision-detection

The function is symmetric in its arguments, i.e. swapping the order of the arguments doesn’t change the result, so it will return the same result when called from different windows.
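
To illustrate the symmetry, here is the same check applied to three example boxes. The `Rectangle` type is an assumed definition (the post does not show it), the function body is repeated from above so the example is self-contained, and the example values are made up. In screen co-ordinates Y grows downward, so `top` is smaller than `bottom`.

```typescript
// Assumed definition of the Rectangle type used by the overlap check.
interface Rectangle {
  left: number;
  top: number;
  right: number;
  bottom: number;
}

function doRectanglesHaveOverlap(
  firstRectangle: Rectangle,
  secondRectangle: Rectangle
): boolean {
  return (
    firstRectangle.left < secondRectangle.right &&
    firstRectangle.right > secondRectangle.left &&
    firstRectangle.top < secondRectangle.bottom &&
    firstRectangle.bottom > secondRectangle.top
  );
}

const windowA: Rectangle = { left: 0, top: 0, right: 100, bottom: 100 };
// Shifted down and to the right, so it covers windowA's bottom-right quarter.
const windowB: Rectangle = { left: 50, top: 50, right: 150, bottom: 150 };
// Starts exactly where windowA ends, so the two only share an edge.
const windowC: Rectangle = { left: 100, top: 0, right: 200, bottom: 100 };

const aOverlapsB = doRectanglesHaveOverlap(windowA, windowB); // true
const bOverlapsA = doRectanglesHaveOverlap(windowB, windowA); // true, same result with the order swapped
const aOverlapsC = doRectanglesHaveOverlap(windowA, windowC); // false, the strict comparisons exclude touching edges
```

Because the comparisons are strict, two windows placed exactly side by side do not count as overlapping until one is moved at least a pixel into the other.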

With that logic defined, we can render models in peer windows as such:

Live Demo notes: Begin by opening the demo in a single window. Then, open additional windows of the same link to fully engage with the multi-window 3D interaction. You can position these windows side-by-side or overlap them on your screen.

Closing Notes

I had a lot of fun building this. There are a few things I’d like to improve on.

We aren’t taking the model’s position into account, causing the peer models to be rendered in arbitrary positions. As a follow-up, we could do the following within each window:

- Determine the position of its owned model on the canvas and on the screen.

- In order to get the owned model’s position on the screen, we’ll sum the x,y offset co-ordinates from the top left corner of the screen for the browser window, the canvas, and the model.

- This will then be synced across each window via local storage.

- Render each peer window’s model in its equivalent 3D position in the canvas
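
The offset arithmetic in the steps above could be sketched as follows. This covers only the 2D pixel math; mapping canvas pixels to 3D world co-ordinates depends on the camera and is left out. `Point2D`, `toScreenPosition`, and `toLocalCanvasPosition` are hypothetical names, and the numbers in the usage lines are made up.

```typescript
interface Point2D {
  x: number;
  y: number;
}

// Screen position of a model: the window's origin on the screen, plus the
// canvas's offset inside the window, plus the model's offset inside the canvas.
function toScreenPosition(
  windowOrigin: Point2D,
  canvasOffset: Point2D,
  modelOffset: Point2D
): Point2D {
  return {
    x: windowOrigin.x + canvasOffset.x + modelOffset.x,
    y: windowOrigin.y + canvasOffset.y + modelOffset.y,
  };
}

// The inverse, from a peer window's point of view: subtract that window's
// own origin and canvas offset to get a position relative to its canvas.
function toLocalCanvasPosition(
  screenPosition: Point2D,
  windowOrigin: Point2D,
  canvasOffset: Point2D
): Point2D {
  return {
    x: screenPosition.x - windowOrigin.x - canvasOffset.x,
    y: screenPosition.y - windowOrigin.y - canvasOffset.y,
  };
}

// The owning window sits at (100, 100); its canvas starts 80px below the top.
const modelOnScreen = toScreenPosition(
  { x: 100, y: 100 },
  { x: 0, y: 80 },
  { x: 200, y: 150 }
);

// A peer window at (400, 100) with the same canvas offset.
const modelInPeerCanvas = toLocalCanvasPosition(
  modelOnScreen,
  { x: 400, y: 100 },
  { x: 0, y: 80 }
);
```

Here `modelOnScreen` is (300, 330) and `modelInPeerCanvas` is (-100, 150). The negative x means the model lies to the left of the peer’s canvas, so it would only become visible there once the windows are moved closer together.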

This would look something like this: